Before we get into the specifics of how to sadistically abuse Tableau, let's clear the air: there's something about inaccessible, expensive, proprietary enterprise software that tends to put me in a touchy mood. As we know, B2B software pricing has nothing to do with code quality or even value-add, but rather with businesses' tendency to create time-based urgencies without warning: the kinds of urgencies that may be solved by, say, a tool of sorts.

My first interaction with Tableau actually took place after I had committed myself to the cult of Python's Pandas library and all that comes with it. Tableau does little to hide the fact that it is a GUI for data manipulation and SQL queries; in most cases, the calculation syntax is exactly the same. From my perspective, Tableau could be a time-saver: instead of rewriting variations of the same scripts over and over, I could use Tableau to do these tasks visually, for the sake of both speed and transparency. It was a win-win for trivial tasks, with one glaring exception: the ability to write back to a database. You'd think I would have thought that far ahead before purchasing my own Tableau Server and license, conveniently billed upfront annually.

The Rise of ETL

The presence of ETL as an acronym is a perfect reflection of where we are in data engineering's growth trajectory. The lack of effective Extract, Transform, and Load workflow products tells us a couple of things: we have too many data sources (whether they be APIs or private data sets), and the raw data is virtually unusable until cleaned. With the right software, this process could be relatively trivial. There are plenty of contenders trying to make it simple, and I'd like to express my unadulterated astonishment that they are all failing miserably at it, mostly thanks to poor decision-making and human error.

The ETL Market

As it stands, Parabola.io tops my list of ETL products. Parabola hits the nail on the head when it comes to UI and ease of use. This raises the question: why, then, are their latest releases focused on support for exporting to garbage products like Smartsheet? Currently, the only export destination that is actually a database is MySQL. As much as I want Parabola to succeed, nothing has improved if our workflow still involves manually setting up a third-party DB with a schema that perfectly matches our output.

Google Cloud is doing its best to somehow tie separate products such as Dataprep and BigQuery together. We'll see how that goes; there's no mention of data extraction from APIs in this flow just yet. We might be waiting some time for Google's perfect answer to mature.

GitLab supposedly just announced its own effort to tackle this space with the upcoming Meltano. Hopefully they have their heads on straight.

Anyway, since the world has failed us, we'll just exploit a Tableau backdoor to do this while humanity catches up.

Tableau's REST API

As hard as Tableau tries to obfuscate anything and everything, their REST API gets us exactly what we want after a bit of red tape. We need to run 3 API calls:

- POST /api/[api-version]/auth/signin: Generate a token so we can actually use the API

- GET /api/[api-version]/sites/[site-id]/views: List all view metadata in a Tableau "site."

- GET /api/[api-version]/sites/[site-id]/views/[view-id]/data: Receive a comma-delimited (CSV) response with the contents of your target view

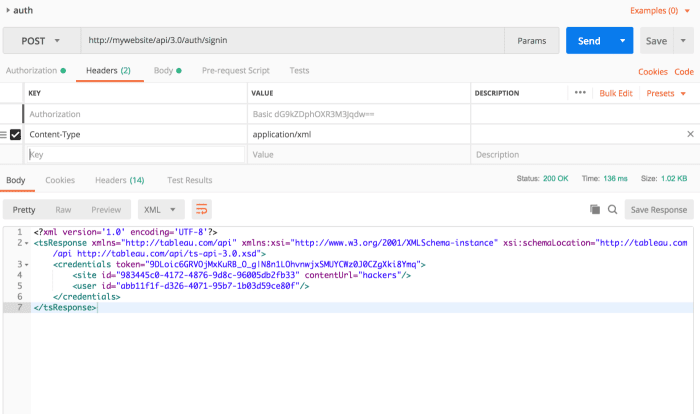

What R U Token about

To receive our token, we'll POST our username and password, wrapped in a small XML request body, to this simple endpoint:

POST https://mysite.com/api/3.0/auth/signin

The response will come back as XML and give us two critical items: our token and our site ID:

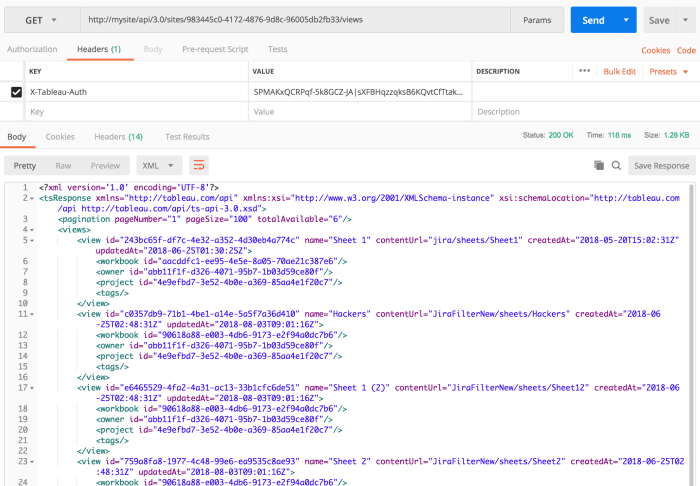
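In Python, the sign-in step might look something like the sketch below. It sticks to the standard library; the server URL, credentials, and helper names are placeholders of my own, not anything Tableau ships. It assumes the default XML format and an empty `contentUrl` (which targets the default site), and it skips error handling entirely.

```python
import urllib.request
import xml.etree.ElementTree as ET

# Tableau REST API responses live in this XML namespace.
NS = {"t": "http://tableau.com/api"}


def build_signin_body(username: str, password: str, site_content_url: str = "") -> bytes:
    """Build the XML sign-in payload. An empty contentUrl means the default site."""
    ts_request = ET.Element("tsRequest")
    creds = ET.SubElement(ts_request, "credentials", name=username, password=password)
    ET.SubElement(creds, "site", contentUrl=site_content_url)
    return ET.tostring(ts_request)


def parse_signin_response(xml_text) -> tuple:
    """Pull (token, site_id) out of the sign-in response XML (str or bytes)."""
    root = ET.fromstring(xml_text)
    creds = root.find("t:credentials", NS)
    site = creds.find("t:site", NS)
    return creds.get("token"), site.get("id")


def sign_in(server: str, username: str, password: str, version: str = "3.0") -> tuple:
    """Hypothetical helper: POST credentials to /auth/signin and return (token, site_id)."""
    req = urllib.request.Request(
        f"{server}/api/{version}/auth/signin",
        data=build_signin_body(username, password),
        headers={"Content-Type": "application/xml"},
        method="POST",
    )
    with urllib.request.urlopen(req) as resp:
        return parse_signin_response(resp.read())
```

Usage would be along the lines of `token, site_id = sign_in("https://mysite.com", "me", "hunter2")`, with both values feeding the next two calls.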

List Views by Site

Next up we're GETing the following endpoint:

https://mysite.com/api/3.0/sites/543fc0-4123572-483276-9345d8c-96005d532b2fb33/views

Note that Tableau asks for the site ID from the previous response to be part of the URL string.

We'll also need to pass our token in an X-Tableau-Auth header:

X-Tableau-Auth: SPMJsdfgHIDUFihdwPqf-5k8GCZJA|sXhFBHzzqksB6K567fsQvtCfTtakqrJLuQ6Cf

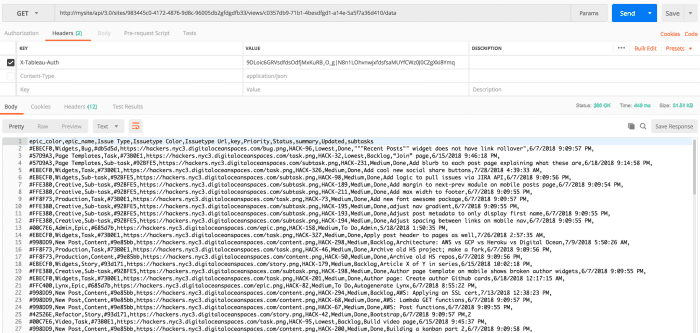
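Listing views is a plain GET with the token in the `X-Tableau-Auth` header. Here's a rough sketch, again with hypothetical helper names, that pulls each view's ID, name, and content URL out of the XML. It only reads the first page of results; a site with lots of views would need Tableau's paging parameters on top of this.

```python
import urllib.request
import xml.etree.ElementTree as ET

# Tableau REST API responses live in this XML namespace.
NS = {"t": "http://tableau.com/api"}


def parse_views(xml_text) -> list:
    """Return id, name and contentUrl for every <view> in a list-views response."""
    root = ET.fromstring(xml_text)
    return [
        {"id": v.get("id"), "name": v.get("name"), "contentUrl": v.get("contentUrl")}
        for v in root.findall("t:views/t:view", NS)
    ]


def list_views(server: str, site_id: str, token: str, version: str = "3.0") -> list:
    """Hypothetical helper: GET /sites/{site_id}/views, authenticated via X-Tableau-Auth."""
    req = urllib.request.Request(
        f"{server}/api/{version}/sites/{site_id}/views",
        headers={"X-Tableau-Auth": token},
    )
    with urllib.request.urlopen(req) as resp:
        return parse_views(resp.read())
```

Printing `[(v["id"], v["name"]) for v in list_views(...)]` is usually enough to spot the view you're after.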

Reap your Reward

Pick the ID of the view you're looking to extract data from. Choose wisely; it's your time now. Enter that view ID into the final endpoint URL:

https://mysite.com/api/3.0/sites/983445c0-4172-4876-9d8c-96005db2gfdgdfb33/views/c0357db9-71b1-4besdfgd1-a14e-5a5f7a36d410/data
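This last call returns raw CSV rather than XML. A minimal sketch of fetching it and turning it into rows with the standard `csv` module follows; the helper names are mine, and decoding as `utf-8-sig` is an assumption that simply tolerates a byte-order mark if one ever shows up.

```python
import csv
import io
import urllib.request


def parse_view_csv(csv_text: str) -> list:
    """Turn the CSV body from /views/{view_id}/data into a list of row dicts."""
    return list(csv.DictReader(io.StringIO(csv_text)))


def fetch_view_data(server: str, site_id: str, view_id: str, token: str, version: str = "3.0") -> str:
    """Hypothetical helper: GET a view's data as CSV text, authenticated via X-Tableau-Auth."""
    req = urllib.request.Request(
        f"{server}/api/{version}/sites/{site_id}/views/{view_id}/data",
        headers={"X-Tableau-Auth": token},
    )
    with urllib.request.urlopen(req) as resp:
        # utf-8-sig strips a leading BOM if present; otherwise it behaves like utf-8.
        return resp.read().decode("utf-8-sig")
```

Every value comes back as a string, so any type conversion is up to you (or to Pandas).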

Well, well, well.

So now you know how to generate a Tableau REST API token at will. You also know all your view IDs, and how to extract the data from any of those views in a friendly CSV format which happens to play nice with databases. There's a Pandas script waiting to be written here somewhere.
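Here's one shape that Pandas script could take: read a view's CSV text into a DataFrame and write it to a database table with `DataFrame.to_sql`. SQLite stands in for whatever database you actually use, and the column-name cleanup is a hypothetical convention of mine, not something Tableau requires.

```python
import io
import sqlite3

import pandas as pd


def load_view_csv_to_db(csv_text: str, conn: sqlite3.Connection, table: str) -> int:
    """Load a Tableau view's CSV export into a table, replacing any previous copy."""
    df = pd.read_csv(io.StringIO(csv_text))
    # Hypothetical convention: lowercase, underscore-separated column names for SQL.
    df.columns = [str(c).strip().lower().replace(" ", "_") for c in df.columns]
    df.to_sql(table, conn, if_exists="replace", index=False)
    return len(df)


# Example run against an in-memory SQLite database with made-up data.
conn = sqlite3.connect(":memory:")
n = load_view_csv_to_db("Region,Total Sales\nEast,100\nWest,250\n", conn, "sales_by_region")
print(n, conn.execute("SELECT region, total_sales FROM sales_by_region").fetchall())
```

Pointing this at a real database generally means handing `to_sql` a SQLAlchemy engine instead of a `sqlite3` connection; `if_exists="replace"` keeps each scheduled run idempotent at the cost of rewriting the whole table.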

At this point, you now have all the tools you need to automate the systematic pillaging of your Tableau Server data. Take a brief moment to remember the days when Tableau would wave their flags through the countryside as a sign of taunting warfare. They've collected your company's checks and given you iFrames in return.

Go forth, my brethren. For once, discover the lands of Plot.ly and D3 as Free Men. They may take our paychecks, but they will never take our data.