Last time we became familiar with the handiwork of setting up MySQL locally, navigating databases via command line, and exposing your database to external access. While badass, it has come to my attention that most people don't bother doing things this way. Unless you're getting deep into some heavy architecture, most people opt to use cloud services such as AWS to set up databases that are intended to be interacted with by multiple services.

A perfect example is one we ran into over the weekend while working on this very blog. We're running a Ghost instance, which is a respectably complex production-ready app. For a bunch of guys just looking to make some stupid blog widgets, it became obvious that reverse engineering the undocumented inner workings of an open-source node app was a rabbit hole of complexity.

Building on AWS

In our case, AWS is useful for enforcing the separation of concerns. Instead of building new logic into a live app, we can build that logic elsewhere in a reusable way across multiple apps.

The end goal here is to read/write to a database. That said, there's still a fair amount of complexity involved. We'll need to leverage the following AWS services:

- RDS (Relational Database Service): A cloud-hosted database

- API Gateway: An interface for building APIs

- Lambda: The necessary serverless connector between RDS and Gateway

- IAM: Amazon's god-awful user and policy manager

For now, all we're going to worry about is RDS.

Data is the New Bitcoin is the New Oil is the New Gold

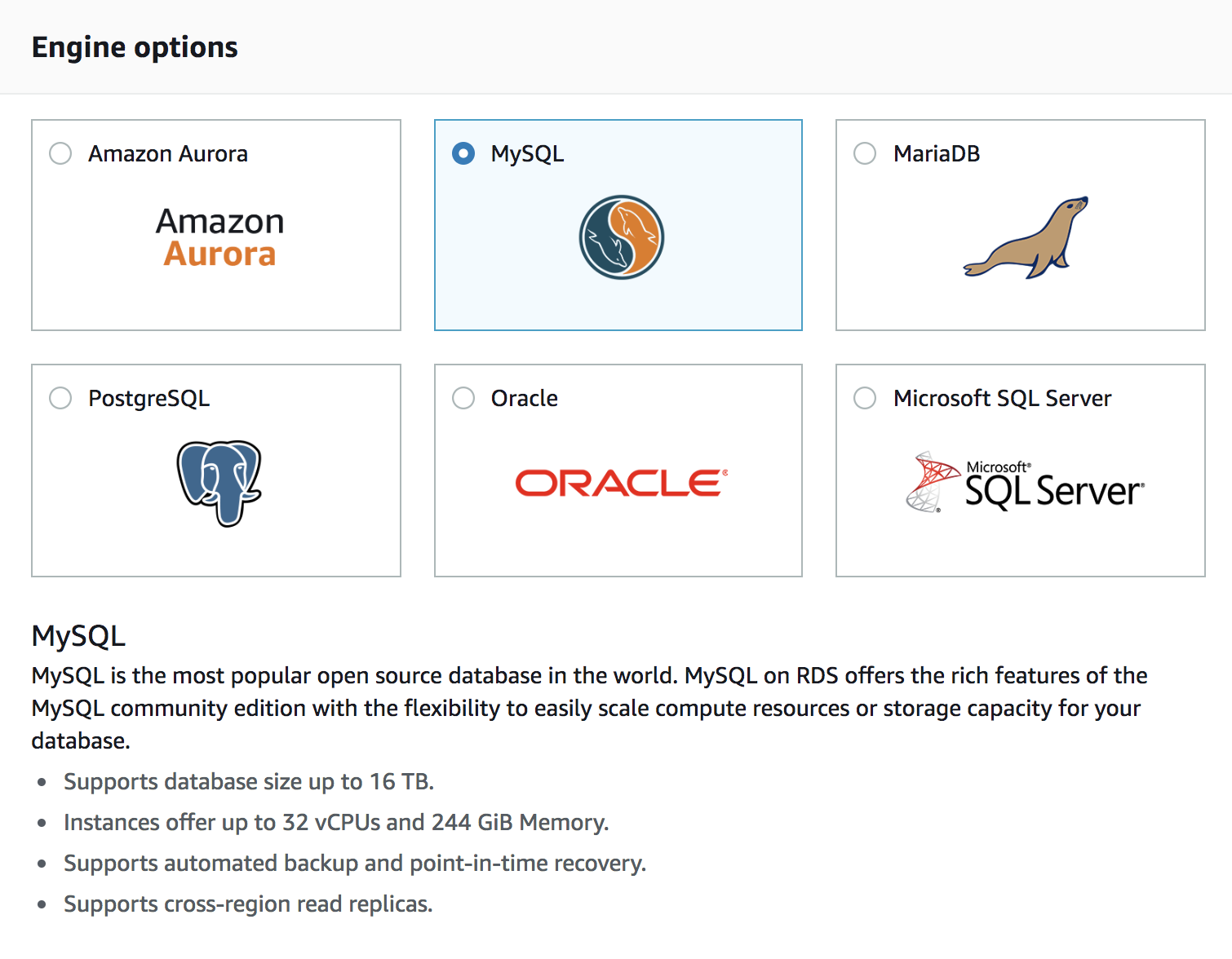

Head to the AWS console and create a new RDS instance. Once prompted, go with MySQL:

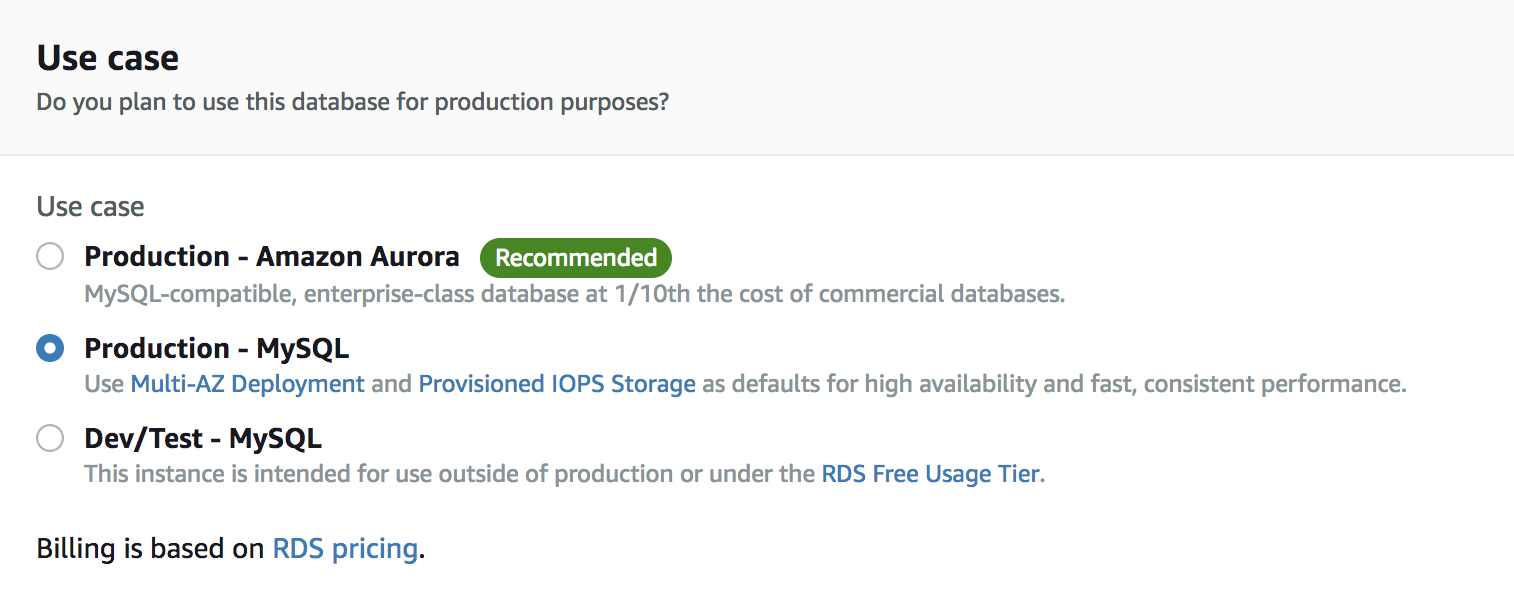

Stick with MySQL Production on the next screen.

Configuration Settings

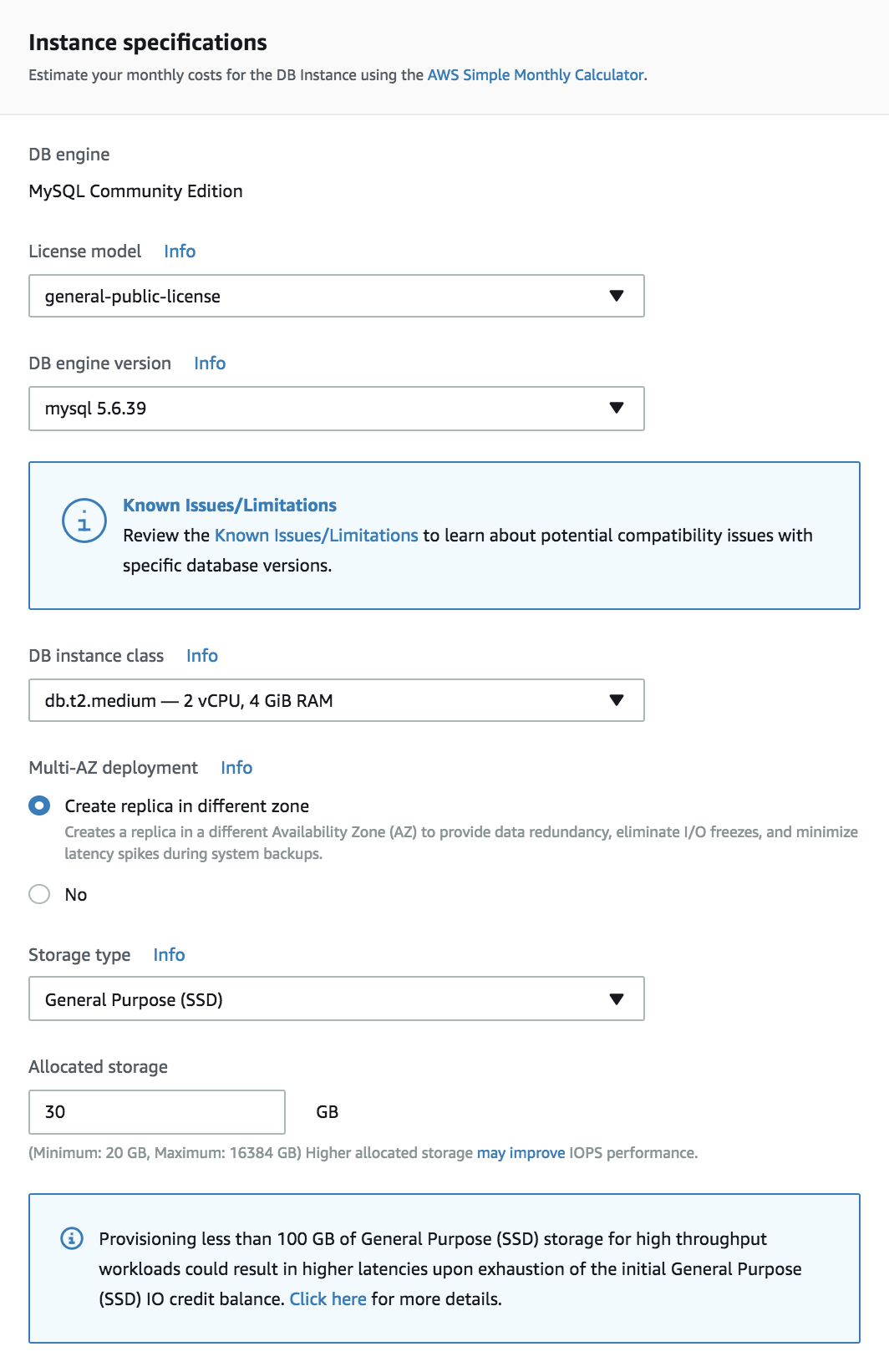

This is where we set our configurations. You'll notice immediately how convoluted AWS tends to be with its naming conventions. I hate how intentionally unintuitive all of AWS tends to be (what the hell is a db.t2.medium)? This sort of absurdity is just something we need to deal with forever. Amazon is technically outside the realm of enforceable Monopoly laws, and there's no reason to believe their reign of mediocre products and talking robots will ever end.

- License: Select general-public-license

- Version: Any version will do, but I'd recommend staying recent.

- Instance class: Most of these instances are huge and unnecessary. Go with something small: I would also advise looking at the pricing plan.

- Multi AZ: Unless you're running a large-scale enterprise, creating DB replicas is probably overkill. Save money and select "no."

- Storage type: Select General Purpose.

- Allocated storage: Feel free to size your database to your needs, but keep cost in mind.

- Publicly Accessible: True.

Once your preferred configuration is complete, it takes time for the database to be created. While we wait, let's move on to creating a user to access this. We can do this with IAM: another AWS product with an even more terrible interface.

Access

Fair warning: user roles and permissions are the worst part of AWS. I could write an entire series on how deep this mess of a scheme goes, but I still barely understand what I'm doing most of the time.

Creating a User

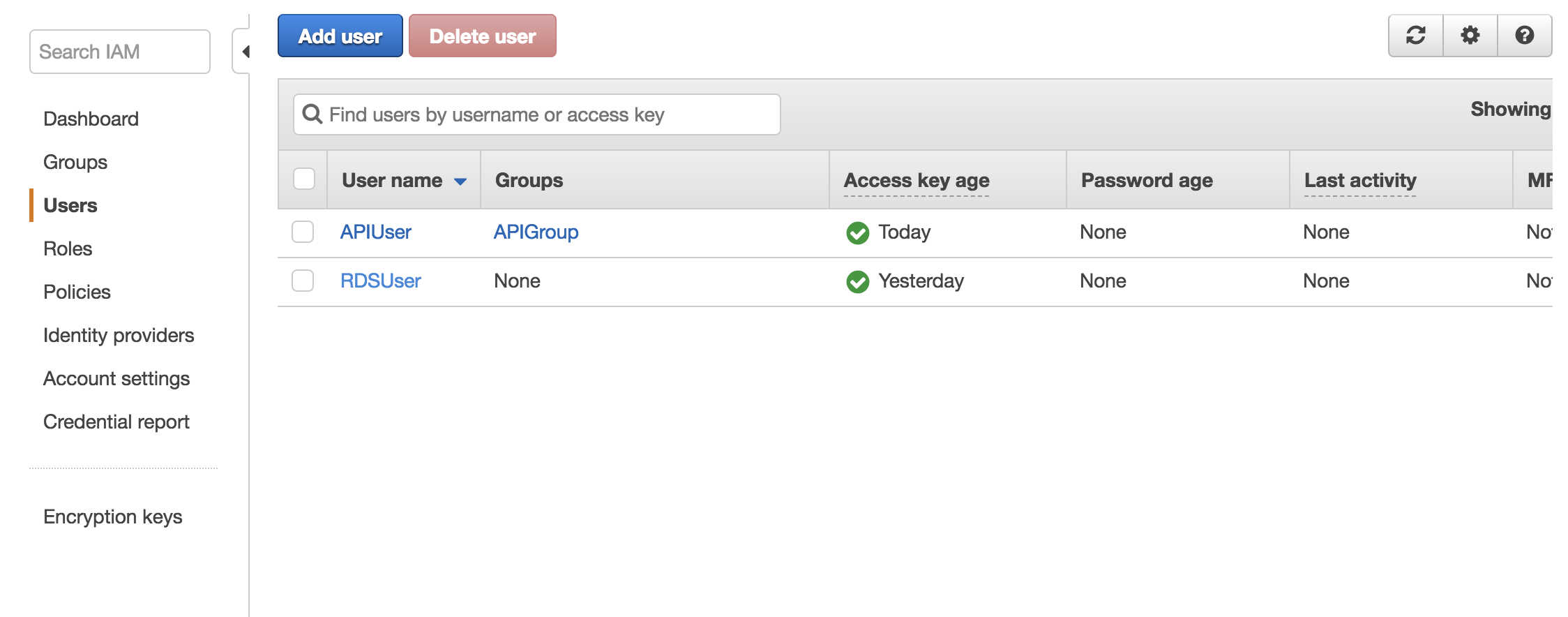

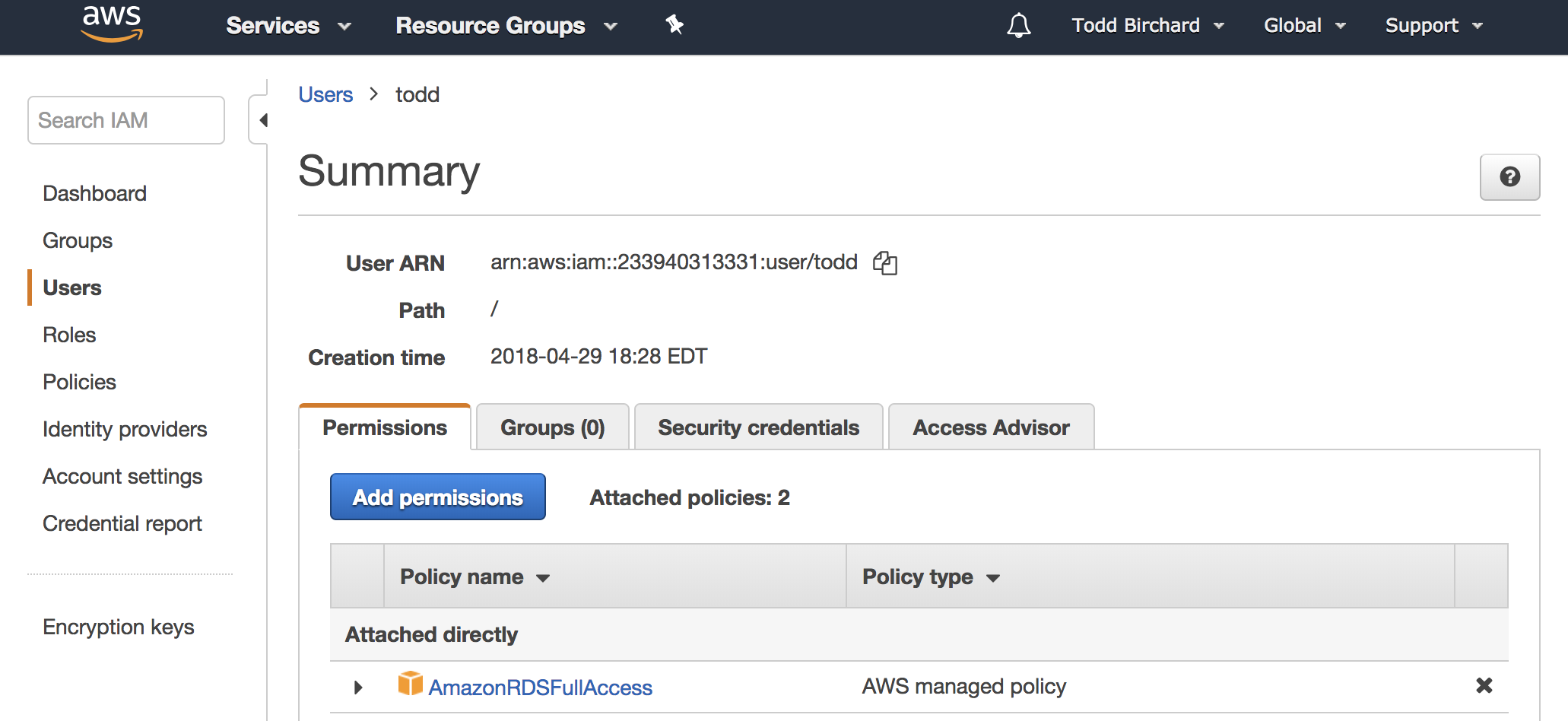

Create a new user that will access the database. Go to the Users panel and create a user:

Modifying permission policies

Permissions work by "attaching" existing "policies" to users, groups, etc. AWS has some default policies that we can leverage for our purposes, so this should luckily be somewhat straightforward.

Policies can also be combined, so users have multiple policies across AWS products.

Native Client

Once your DB pops up in AWS, we will need to get you a GUI to modify your DB. Don't even try to be a hotshot by setting up all your tables via command line. It sucks, it's slower, and nobody is impressed. Don't bother downloading the AWS CLI either. Do not pass GO. Do not collect 500 dollars.

If you need to install MySQL locally, an OSX download can be found here. Come to think of it, that step was probably unnecessary. I'm not sure why I did that.

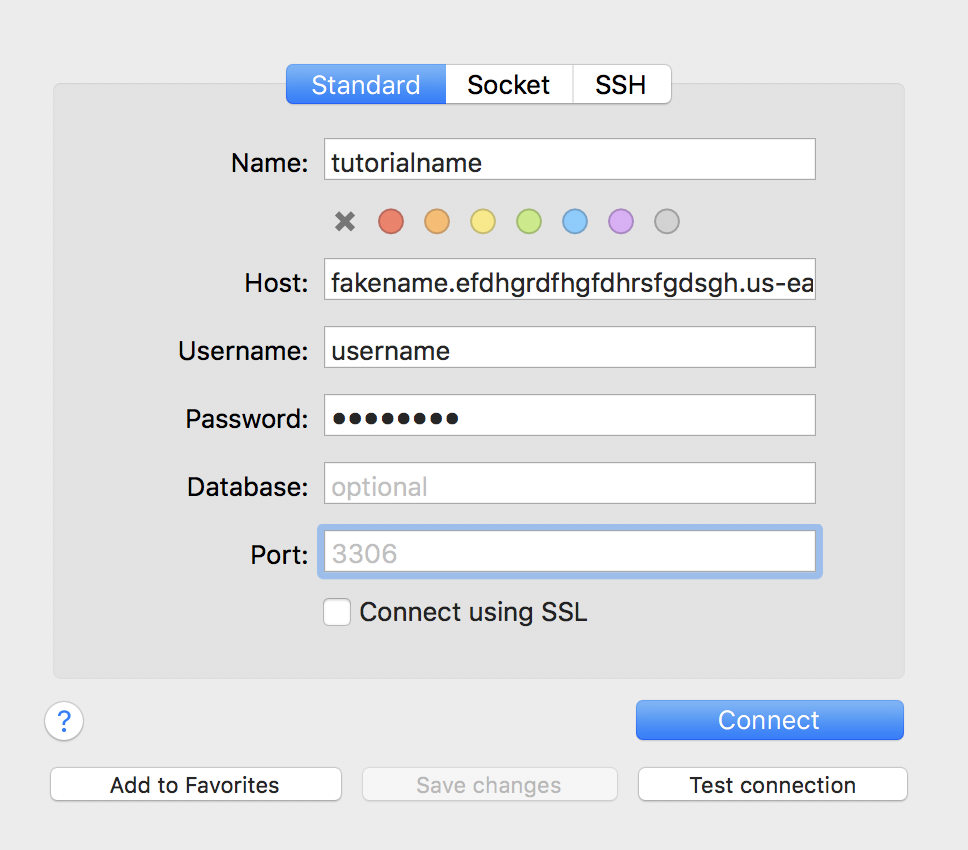

I settled on Sequel Pro as a client. It's good enough, and their logo looks like pancakes. That's enough credibility for me.

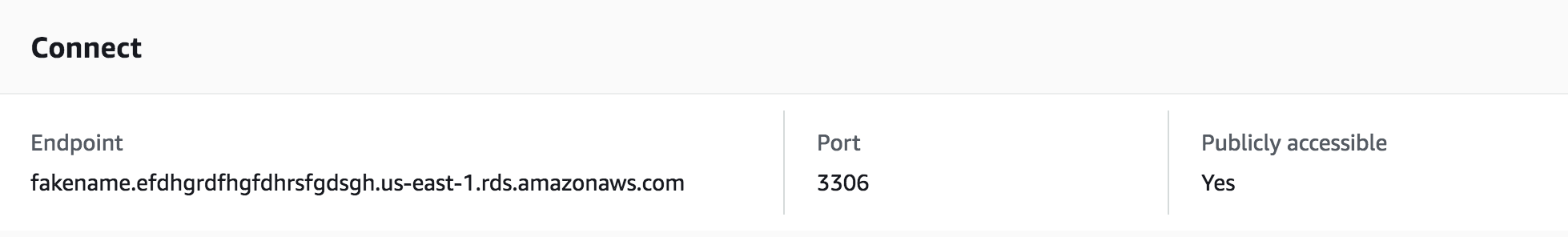

To connect to your database, you'll need to retrieve the endpoint and port number from your RDS console:

Connect to that ish:

Hopefully, everything went well! If not, I'm sure the problem will be a quick and easy fix. Surely it won't involve mindlessly swapping permissions for an entire day. You definitely won't somehow end up corrupting your .bash_profile, making Python invisible to your OS, and effectively destroying your computer. Only an idiot would do something like that. Yesterday evening.

Go ahead and get accustomed to the UI of Sequel Pro - it's pretty straightforward, and ten thousand million times less effort than creating tables via terminal. Create columns under the "structure" tab - the terminology should immediately seem familiar if you've been following the series until this point.

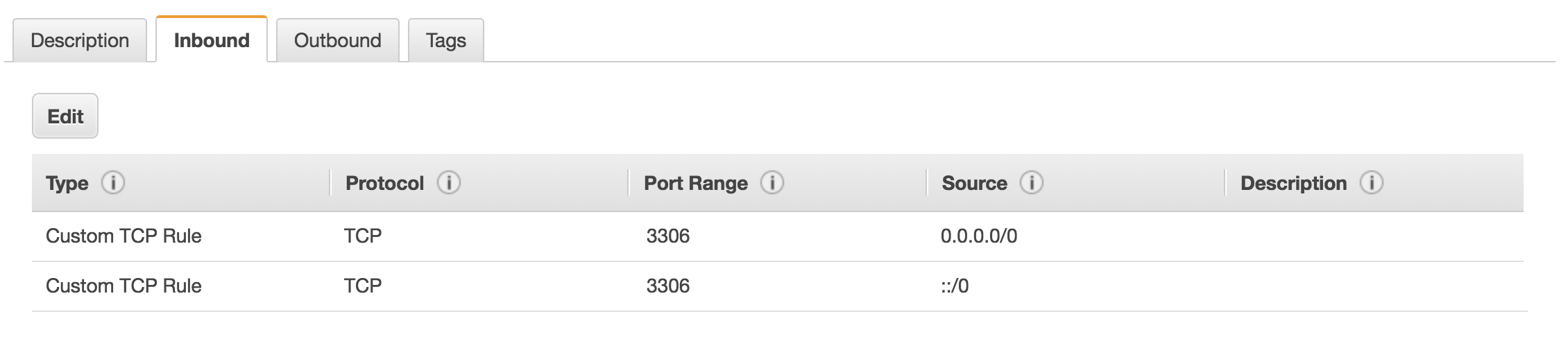

Configure your Security Groups

If you're running into an issue connecting to your DB externally, I happened to run into a nice little issue the other day with security groups. RDS instances limit what kinds of connections they accept via "security groups." This is yet another layer of AWS security hassle where you'll need to specify which hosts are permitted to access your DB, by type of connection, port range, etc.

If you'd like to get this over with as soon as possible, this configuration will open you up to the entire world:

Happy Trails

Next time we're going to sink deeper into this rabbit hole by exploring the wonderful world of serverless functions. Setting up AWS Lambda will allow us to configure endpoints which will allow us to read and write data to our brand-new table in the sky.

We'll still need to get into API Gateway after that, but let's not think about that just yet. Let's not address the absurd amount of time and effort we're about to spend to make a god damn widget that shows Github commits.